PeerTube stress tests: resilience lies in your peers!

Many admins and uploaders wonder how many concurrent viewers a PeerTube instance can handle, and how much its P2P feature helps the server broadcast a live or a video.

This is why, with the support of NLnet and Octopuce, we have been running real-world stress tests on a regular PeerTube instance. The aim is of course to optimize the software, but first and foremost to be able to confidently state how many concurrent viewers a PeerTube instance should be able to handle, and to understand how P2P behaves in such conditions.

We chose to simulate 1,000 viewers because it is a symbolic number, but also because it covers 99% of the streams broadcast on Twitch in 2022. PeerTube would then technically be able to handle 99% of these use cases, which is a large number of users ;)

If you would rather skip the technical stuff, you can jump directly to the conclusion.

Setting up the benchmark

To get closer to real-life conditions, we decided to run 1,000 Chrome web browsers watching the same video, each one with a dedicated public IP to properly simulate real viewers.

In order to run 1,000 real Chrome web browsers, we decided to create a Selenium grid and to spawn 1,000 Selenium nodes using Docker. This way, each node can have its own IPv6 public address. Luc, the amazing sysadmin at Framasoft, developed scripts to automatically generate this Selenium grid using Hetzner cloud. His work can be found on https://framagit.org/framasoft/peertube/selenium-stack.

After the first conclusive tests, in which we reached 500 web browsers, we ran into difficulties with Hetzner cloud: they refused to increase our VPS quota to a number that would have allowed us to seamlessly run the Selenium grid with 1,000 web browsers. After we spent several days trying to find alternatives, Octopuce, a French hosting company that hosts several PeerTube instances, offered us the use of a powerful server to help us reach our goal of 1,000 web browsers. Shout out to them!

We made several performance improvements in PeerTube core to reach 1,000 viewers. Some of them are already available in PeerTube V6, like the federation and view events optimizations. Others will be available in the next release (V6.1): the ability to customize the views/playback events interval, a new viewer federation protocol that sends far fewer messages, etc.

Once the Selenium grid is ready and the PeerTube instance is updated to include the above performance improvements, we can spawn 1,000 web browsers to load a video on https://peertube2.cpy.re/ (our nightly updated PeerTube instance) using WebdriverIO. Each automated web browser is programmed to load the video watch page, play the video, and wait there until the test ends.
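As a rough illustration of what each automated browser does, here is a minimal sketch of such a viewer routine. It is not the actual test script: it only assumes a WebdriverIO-style `browser` object exposing the `url`, `$`, `execute` and `pause` commands. The function name `watchVideo`, the generic `video` selector, the 30-second timeout and the muted playback are assumptions made for the example.

```javascript
// Hypothetical viewer routine: load the watch page, start playback,
// then stay on the page until the test ends.
// `browser` is expected to expose WebdriverIO-style commands.
async function watchVideo(browser, watchUrl, watchDurationMs) {
  // Load the video watch page
  await browser.url(watchUrl)

  // Wait until the player has inserted a <video> element in the page
  const video = await browser.$('video')
  await video.waitForExist({ timeout: 30000 })

  // Start playback from inside the page.
  // Muting is an assumption, used here to avoid autoplay restrictions.
  await browser.execute(() => {
    const el = document.querySelector('video')
    el.muted = true
    el.play()
  })

  // Keep the tab open so the viewer keeps downloading and sharing segments
  await browser.pause(watchDurationMs)
}
```

Passing the `browser` object as a parameter keeps the routine independent from how the session is created, so the same function could be reused for every node of the grid.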

Benchmark conditions

The test PeerTube instance was installed following the official installation guide on Debian 12.2, with nginx, PostgreSQL and Redis on the same machine.

Hardware specifications:

- 4 vCores of an i7-8700 CPU @ 3.20GHz

- A hard drive (not an SSD)

- 4GB of RAM

- 1Gbit/s network

The important PeerTube instance settings:

- The chat plugin is not enabled

- Logs are in warning mode to reduce logging overhead

- Client logs are enabled

- Metrics are enabled but HTTP request duration metrics are disabled

- Viewers federation V2 is enabled (feature behind a feature flag that we plan to enable in PeerTube 6.2)

- Object Storage (S3) is not enabled

Benchmarked videos/lives are public, so static files are directly served by nginx.

The Chrome web browser has its network speed limited using:

```javascript
browser.setNetworkConditions({
  offline: false,
  download_throughput: 2000 / 8 * 1024, // 2000kbit/s
  upload_throughput: 300 / 8 * 1024, // 300kbit/s
  latency: 500
})
```

Unfortunately, network conditions do not apply to WebRTC, so we were unable to limit P2P uploads/downloads. These settings only apply to HTTP requests.
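To make the units explicit: the division by 8 in the throughput values suggests they are expressed in bytes per second rather than bits. The sketch below simply redoes that arithmetic. `kbitToBytesPerSecond` is a hypothetical helper name, and it follows the same convention as the snippet (1024 bytes per kilobyte).

```javascript
// Convert a rate in kbit/s to bytes per second, using the same convention
// as the network settings: divide by 8 (bits -> bytes), multiply by 1024.
function kbitToBytesPerSecond(kbitPerSecond) {
  return kbitPerSecond / 8 * 1024
}

const downloadBytesPerSecond = kbitToBytesPerSecond(2000)
const uploadBytesPerSecond = kbitToBytesPerSecond(300)

console.log({ downloadBytesPerSecond, uploadBytesPerSecond })
// prints { downloadBytesPerSecond: 256000, uploadBytesPerSecond: 38400 }
```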

Benchmark results

We ran stress tests on 4 scenarios:

- A live video with Normal Latency setting

- A live video with High Latency setting

- A live with High Latency setting where half of the viewers had P2P disabled

- A regular VOD video

Live videos provide only one resolution with a bitrate of 650kbit/s, while the VOD video provides 4 resolutions, with the highest one having a bitrate of 1.2Mbit/s.
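For context, a back-of-the-envelope baseline helps to read the graphs: how much bandwidth would the server need if every viewer fetched the stream over HTTP only? The sketch below is a simplification, not a measurement. It assumes every viewer plays the highest resolution at a steady rate and ignores buffering bursts and protocol overhead; `serverBandwidthMbit` is a hypothetical helper name.

```javascript
// Rough estimate of the server bandwidth needed without any P2P:
// every viewer downloads the full bitrate from the server.
function serverBandwidthMbit(viewers, bitrateKbit) {
  return viewers * bitrateKbit / 1000
}

const viewers = 1000
const linkMbit = 1000 // the 1Gbit/s network of the test server

const liveMbit = serverBandwidthMbit(viewers, 650) // live, single resolution
const vodMbit = serverBandwidthMbit(viewers, 1200) // VOD, highest resolution

console.log(`Live: ${liveMbit} Mbit/s, fits in the link: ${liveMbit <= linkMbit}`)
console.log(`VOD: ${vodMbit} Mbit/s, fits in the link: ${vodMbit <= linkMbit}`)
```

Under these assumptions the live would need 650 Mbit/s, and the VOD video 1,200 Mbit/s, which is more than the 1Gbit/s link can deliver on its own.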

Here are the results of our 4 scenarios, where 1,000 viewers connect to the live/video within a few minutes.

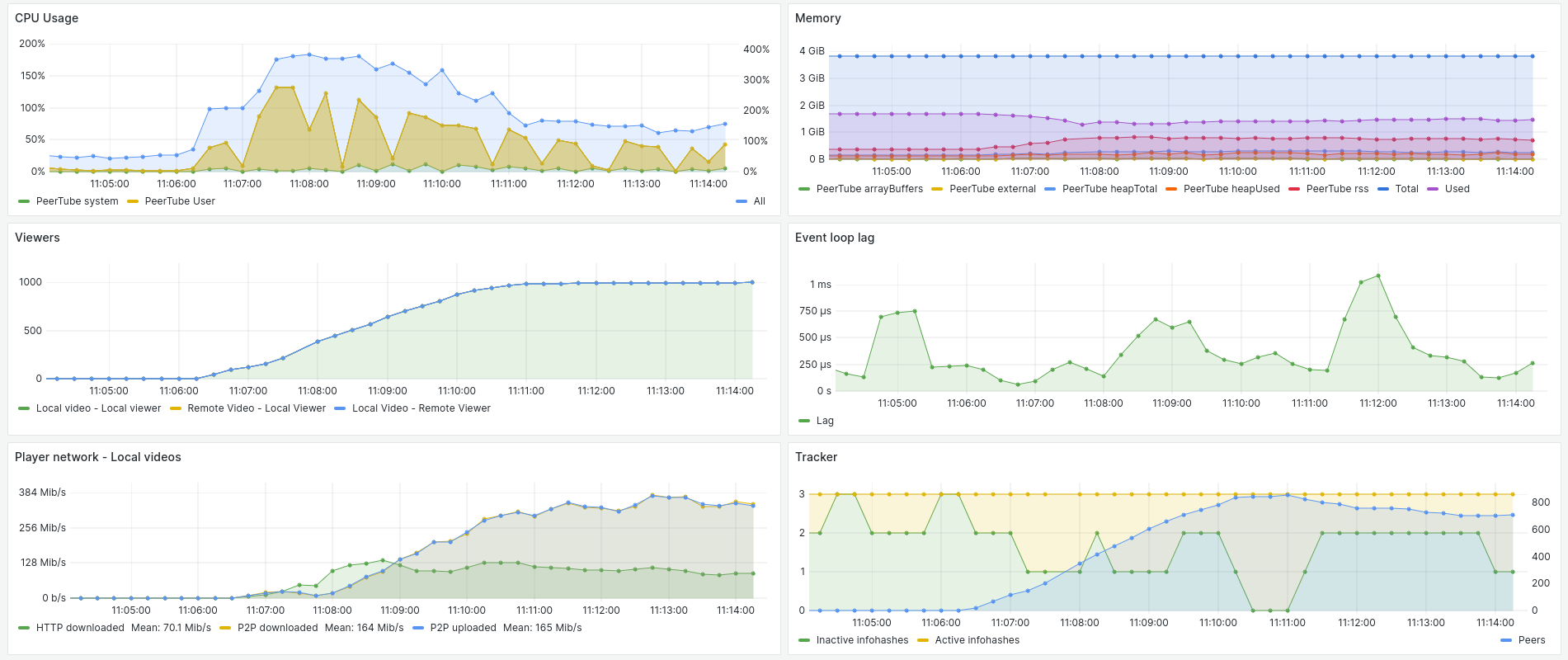

Live with a normal latency

With the default live settings, and therefore a latency of ~30 seconds, we can see that PeerTube CPU usage peaks as web browsers load the video (11:08:00) and tapers off as viewers watch the video (11:11:00). The main job of PeerTube at that point is to handle playback metrics and view events coming from web browsers for statistics and federation. RAM consumption and NodeJS event loop lag remain stable.

Most viewers download the video using HTTP when they load the page, to buffer the live segments, and then progressively try to download more distant segments using P2P. This is why we see an HTTP download peak of 150Mbit/s at the beginning of the graph (11:08:00), which gradually drops to 90Mbit/s (11:12:00). At this point, web browsers mainly exchange live segments using P2P, at up to 370Mbit/s. Under optimal conditions, the P2P aspect of PeerTube reduces the bandwidth required to broadcast a live video by a factor of 3 or 4, which corroborates feedback received from some PeerTube admins.

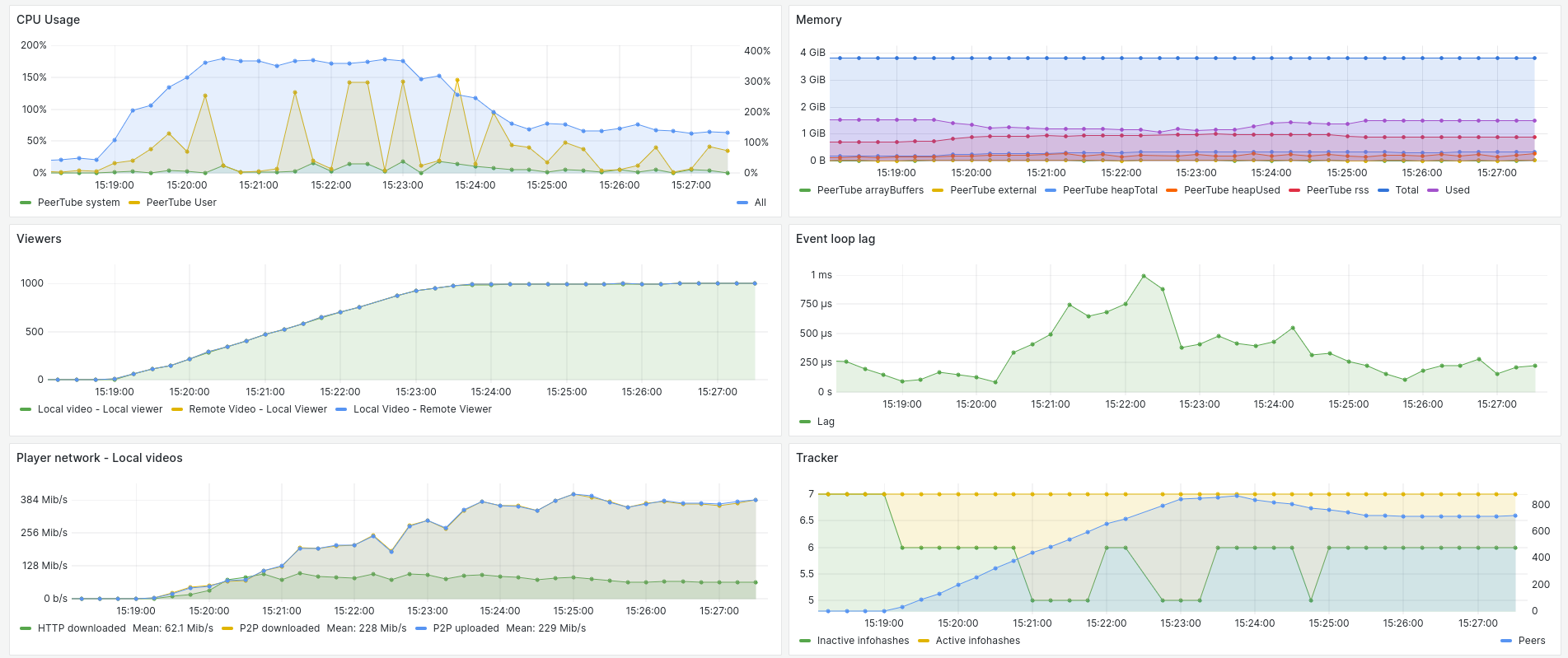

Live with a high latency

We also wanted to test a live with the High Latency setting (~60 seconds), which gives web browsers more time to download live segments.

We now have 65Mbit/s for HTTP and 370Mbit/s for P2P (15:25:00). This is a nice improvement, but we think we can further improve the HTTP/P2P ratio in the future by changing some P2P engine settings.

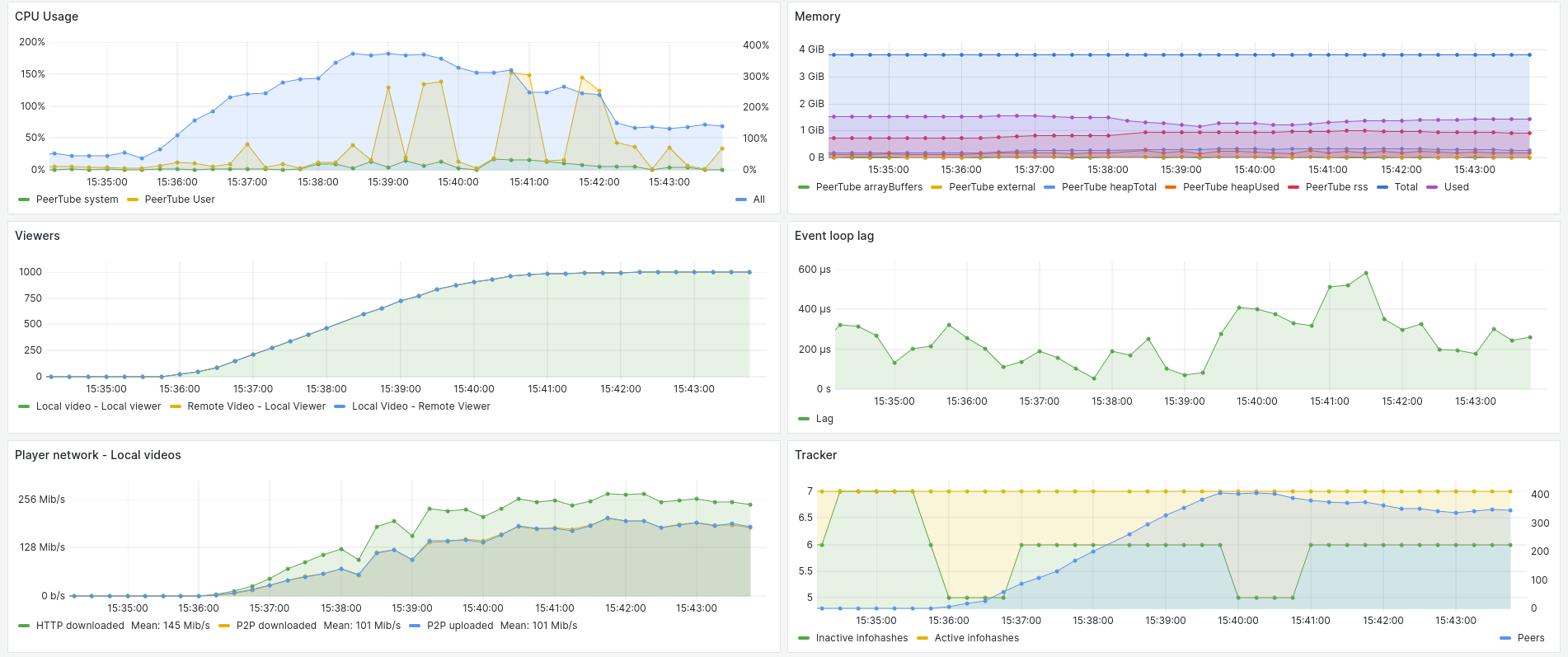

Live with a high latency and half of the viewers with P2P disabled

This scenario tries to mimic "real world" viewers by disabling P2P for half of them.

We have 260Mbit/s for HTTP and 190Mbit/s for P2P (15:42:00). The swarm of 500 P2P-enabled viewers exchanges segments with the same ratio as in the high latency live, while viewers with P2P disabled simply download segments from the server.

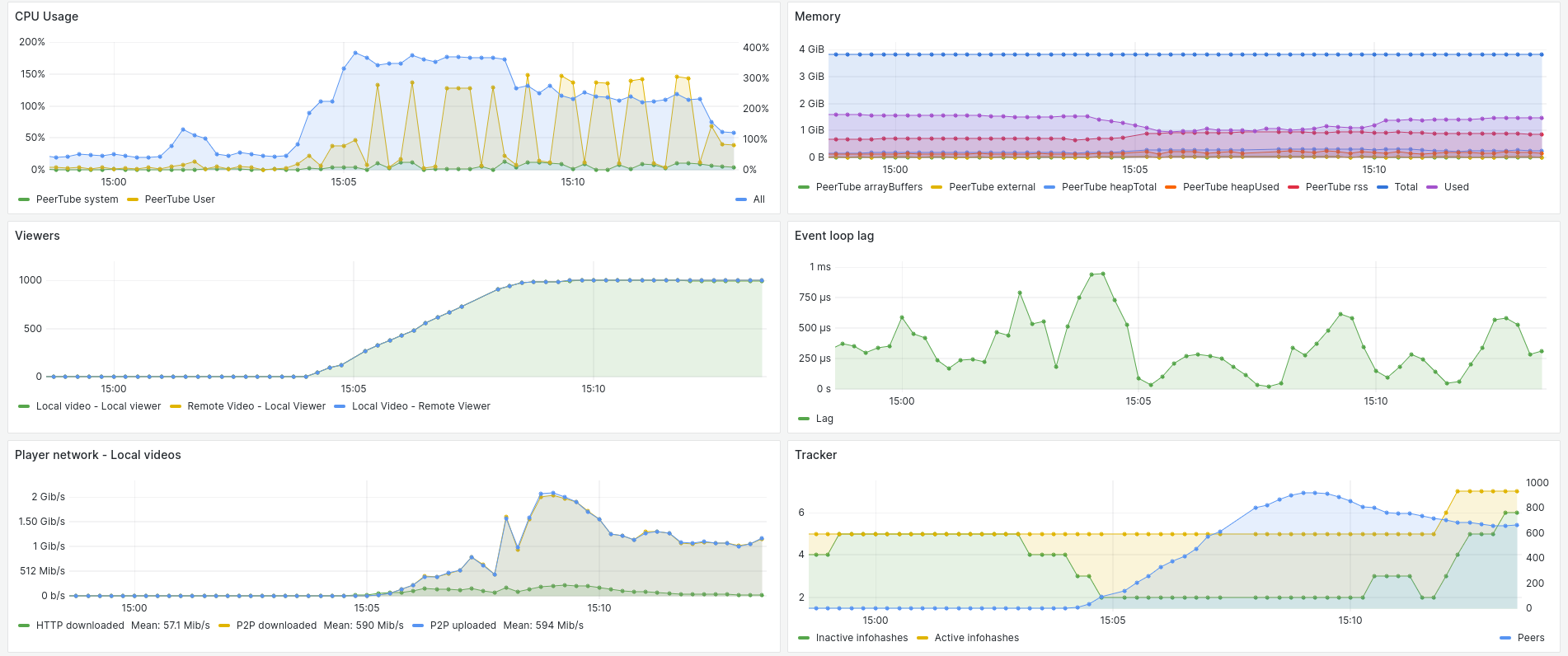

VOD video

It's interesting to focus on lives and analyze their P2P ratio, since viewers are simultaneously watching and sharing the same segments. But we can also imagine use cases where a VOD video goes viral.

Bandwidth consumption is much higher than for a live because the video bitrate is higher, but also because the web browser buffers much more of the video, especially if P2P segments are available. This is why we have a peak of 2,000Mbit/s for P2P at the beginning, gradually dropping to 1,150Mbit/s (15:10:00).

If we zoom in on the HTTP graph, we observe that web browsers download nearby segments using HTTP to avoid playback problems, and then try to download distant segments using P2P. That's why we have a peak of 200Mbit/s for HTTP download at the beginning of the graph (15:09:00).

After a few minutes, the P2P/HTTP ratio becomes very high, with 1,150Mbit/s for P2P and 25Mbit/s for HTTP (15:14:00). It means P2P works very well on VOD videos when viewers are watching the same parts of the video. This is expected behaviour, since there is more time to exchange and buffer distant video segments using P2P.

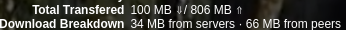

As a bonus, here is a screenshot of my personal web browser during the stress test. It shows that in an optimal situation, with a fiber broadband connection, "regular" viewers can have a very high P2P ratio (800MB uploaded/100MB downloaded).

Technical Overview

Here is an overview of the P2P ratio of the live with normal latency and of the VOD video, with 1,000 viewers:

| | HTTP peak | HTTP after 5 minutes | P2P after 5 minutes | HTTP/P2P ratio after 5 minutes |
|---|---|---|---|---|
| Live | 150 Mbit/s | 90 Mbit/s | 350 Mbit/s | 25% (P2P saves 75% of bandwidth) |
| VOD | 200 Mbit/s | 25 Mbit/s | 1,150 Mbit/s | 2% (P2P saves 98% of bandwidth) |

We consider that these values hold under optimal conditions, since our simulated web browsers had a fast internet connection for P2P that we could not limit. But in our experience, they seem to represent what happens in real life.
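The ratios in the table can be recomputed from the bandwidth figures. The sketch below does so in two ways: the HTTP/P2P ratio used in the table, and, as an alternative reading that is our own assumption, the share of all traffic (HTTP plus P2P) that is served over HTTP. The function names are hypothetical.

```javascript
// Figures from the table, in Mbit/s, measured after 5 minutes
const scenarios = [
  { name: 'Live', http: 90, p2p: 350 },
  { name: 'VOD', http: 25, p2p: 1150 }
]

// Ratio as used in the table: HTTP traffic divided by P2P traffic
function httpToP2PRatio({ http, p2p }) {
  return http / p2p
}

// Alternative reading (assumption): share of all traffic served over HTTP
function httpShareOfTotal({ http, p2p }) {
  return http / (http + p2p)
}

for (const s of scenarios) {
  const ratio = (httpToP2PRatio(s) * 100).toFixed(1)
  const share = (httpShareOfTotal(s) * 100).toFixed(1)
  console.log(`${s.name}: HTTP/P2P = ${ratio}%, HTTP share of total = ${share}%`)
}
```

Both readings lead to the same conclusion: after a few minutes, only a small fraction of the video data comes from the server.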

Conclusion and further work

With these results, we can see that a regular PeerTube website (server rental at around $20/month) can correctly handle 1,000 concurrent viewers if the administrator follows our scalability guide.

It means that PeerTube, a Free-Libre software funded by grassroots donations and grants from the NGI programs, developed over 6 years by volunteer contributors and one paid developer, offers an affordable, resilient, efficient and solid alternative to tech giants' technology. It might be hard to realize but it is true: together, we made it this far.

Even if handling 1,000 concurrent viewers is a nice achievement, PeerTube can still go further with extra configuration.

We also have a few ideas on how to handle even more simultaneous viewers in the future:

- Optimize the settings of our P2P engine for when "High latency" is set for lives

- Add PeerTube configuration to specify external P2P trackers more suited to handle more peers

- Distribute the work of handling view events across multiple machines

- Lazy load some components in the client (like the comments section) to avoid making HTTP requests if the components are not in the web browser viewport
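To illustrate the last idea, here is a minimal sketch of lazy loading based on the browser's `IntersectionObserver` API. It is not taken from the PeerTube client: the function name `lazyLoadWhenVisible` and the `ObserverImpl` parameter are assumptions made for the example, the latter only so the sketch can be exercised outside a web browser.

```javascript
// Run `load` once, the first time `element` enters the viewport.
// `ObserverImpl` defaults to the browser's IntersectionObserver.
function lazyLoadWhenVisible(element, load, ObserverImpl = globalThis.IntersectionObserver) {
  const observer = new ObserverImpl((entries) => {
    if (entries.some((entry) => entry.isIntersecting)) {
      observer.disconnect() // stop watching: the component only loads once
      load()
    }
  })

  observer.observe(element)
  return observer
}
```

With such a helper, the HTTP requests needed by a component like the comments section would only be sent once the viewer scrolls down to it.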

Thanks for reading, and don't hesitate to share your experiences with PeerTube and/or the limits you encountered: we'd be happy to work on them. Also, don't forget to support our work if you can, and share the good news!